Large language models (LLMs) are powerful AI tools designed to generate natural-sounding text in response to specific prompts. The best-known LLM-powered product is ChatGPT, which is built on OpenAI’s GPT models and skyrocketed in popularity in late 2022. Since then, the landscape of LLMs has expanded with even more advanced options.

Choosing the right LLM for your specific use case is critical for successful AI implementation in businesses. While ChatGPT remains a popular choice, it is far from the only solution available. Modern LLMs, such as Google’s Gemini 2.0, Anthropic’s Claude 3, xAI’s Grok-3, and Meta’s LLaMA 3, offer unique strengths that may better fit your business’s needs depending on the task, cost considerations, and customization requirements.

For example:

- Google’s Gemini 2.0 specializes in multitasking, large-document analysis, and enterprise-scale operations, with a context window of up to 2 million tokens.

- Anthropic’s Claude 3 models excel in long-form content generation and nuanced reasoning, with Sonnet supporting a context window of up to 200,000 tokens.

- Meta’s LLaMA 3 provides a flexible open-source solution for businesses seeking cost-effective customization.

- xAI’s Grok-3 offers dedicated modes for handling complex tasks (such as Big Brain Mode) and excels at advanced problem solving.

A carefully selected LLM can empower employees, boost efficiency, improve accuracy, and help businesses make better, more informed decisions. Understanding the strengths of each model and how they align with your goals can provide a significant competitive edge in today’s AI-driven world.

What to Consider When Choosing an LLM

Selecting the right Large Language Model (LLM) for your business depends on a variety of factors. Each model offers unique strengths, so it’s essential to evaluate them based on your specific requirements. Below, we outline the key considerations and provide examples of the latest models to help guide your decision.

| Consideration | Description | Questions to Ask Yourself |
|---|---|---|
| Use Case | Define the tasks the model must perform, such as generating long-form content, summarizing documents, or analyzing data. | – What tasks do I need the model to perform (e.g., summarization, creative writing, data analysis)? – Do I need multimodal features such as image or audio support? |
| Performance & Capabilities | Assess the model’s ability to handle complex prompts, reasoning accuracy, and specific strengths (e.g., coding, research). | – Which LLM has demonstrated the best performance for my specific tasks? – How well does it handle domain-specific questions? |
| Knowledge Cutoff | Check how recent the training data is and whether the model supports real-time browsing for up-to-date knowledge. | – Is the model trained on recent data? – Does it support browsing for real-time updates? |
| Customizability | Determine whether the model allows fine-tuning or personalized settings to meet your business needs. | – Can the LLM be customized to reflect my brand’s tone or specific needs? – Does it offer tools for fine-tuning? |
| Context Window | The maximum input length the model can process in a single prompt, critical for large documents or datasets. | – How much text can the model process at once? – Do I need to analyze large datasets or documents? |
| Pricing | Consider the cost of the model, including free tiers, API pricing, and enterprise plans. | – What is my budget for AI implementation? – Do I need a cost-effective option for frequent usage? |
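
One way to turn these considerations into a decision is a simple weighted scorecard. The sketch below is only an illustration: the weights, the 1-to-5 scores, and the candidate names `model_a` and `model_b` are all hypothetical placeholders, and you would replace them with your own assessment of each model.

```python
# Hypothetical weights for the six considerations in the table above.
# They are assumptions for illustration; adjust them to your priorities.
CRITERIA_WEIGHTS = {
    "use_case": 0.25,
    "performance": 0.25,
    "knowledge_cutoff": 0.10,
    "customizability": 0.10,
    "context_window": 0.15,
    "pricing": 0.15,
}


def weighted_score(scores: dict, weights: dict = CRITERIA_WEIGHTS) -> float:
    """Return the weighted average of per-criterion scores (e.g. 1 to 5)."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


def rank_models(candidates: dict) -> list:
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(
        candidates,
        key=lambda name: weighted_score(candidates[name]),
        reverse=True,
    )


# Placeholder candidates with made-up scores, not real benchmark results.
candidates = {
    "model_a": {
        "use_case": 5, "performance": 5, "knowledge_cutoff": 3,
        "customizability": 2, "context_window": 4, "pricing": 2,
    },
    "model_b": {
        "use_case": 3, "performance": 3, "knowledge_cutoff": 4,
        "customizability": 5, "context_window": 3, "pricing": 5,
    },
}

if __name__ == "__main__":
    for name in rank_models(candidates):
        print(name, round(weighted_score(candidates[name]), 2))
```

A scorecard like this will not make the decision for you, but it forces you to write down which considerations matter most before comparing vendors.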

Check TeamAI’s Model Wizard to See if You Need a Multi-LLM Approach

The Best LLMs to Choose From

Now that we’ve covered how you can choose the best LLM for you, you might be wondering exactly what your options are. Plenty of choices are available to you, but we’ll introduce some of the best ones below.

OpenAI’s Models

GPT-4.5

GPT-4.5 is the latest iteration of OpenAI’s language models, building on the success of GPT-4. It offers enhanced reasoning capabilities, faster processing, and support for multimodal tasks, including text, image, and limited audio inputs. GPT-4.5 also features larger context windows, up to 128,000 tokens for enterprise-level users, making it ideal for handling extensive datasets.

When businesses should consider GPT-4.5

Businesses needing advanced reasoning, industry-specific solutions, or multimodal support will find GPT-4.5 the right fit. However, its higher cost may limit its use for simpler applications.

GPT-3.5

GPT-3.5 remains a reliable and cost-effective option. While less powerful than GPT-4.5, it is faster and can handle simpler tasks effectively. It also supports context windows up to 16,000 tokens, making it a flexible solution for smaller projects.

When businesses should consider GPT-3.5

Businesses with budget constraints or simpler use cases that don’t require the advanced capabilities of GPT-4.5 should consider GPT-3.5.

Google’s Models

Gemini 2.0 Pro

Google’s Gemini 2.0 Pro is a cutting-edge model optimized for multitasking, document-heavy workflows, and coding. It supports enterprise use cases by handling up to 2 million tokens, one of the largest context windows available at the time of writing. Gemini 2.0 Pro excels in tasks requiring extensive data analysis and reasoning.

When businesses should consider Gemini 2.0 Pro

Enterprises needing to process large datasets or perform complex multitasking should consider Gemini 2.0 Pro. It’s ideal for technical workflows and enterprise-scale applications.
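
To see why context window size matters in practice, the sketch below checks which of the models covered so far could take a document in a single prompt. The window sizes are the figures quoted in this article. The rule of roughly four characters per token is a common approximation for English text and is an assumption here, as is the hypothetical `reserve_for_output` allowance. Real token counts depend on each model’s tokenizer.

```python
import math

# Context window sizes (in tokens) as quoted in this article.
CONTEXT_WINDOWS = {
    "GPT-3.5": 16_000,
    "GPT-4.5": 128_000,
    "Gemini 2.0 Pro": 2_000_000,
}

# Assumption: about 4 characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count from the character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def models_that_fit(text: str, reserve_for_output: int = 1_000) -> list:
    """List models whose window holds the text plus room for a reply."""
    needed = estimate_tokens(text) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


if __name__ == "__main__":
    # A made-up 400,000-character document stands in for a long report.
    document = "x" * 400_000
    print(estimate_tokens(document), models_that_fit(document))
```

If a document does not fit the model you prefer, the usual alternatives are splitting it into chunks or summarizing sections first.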

Gemini Flash Lite

Designed as a cost-effective solution, Gemini Flash Lite is tailored for simpler tasks like summarization, translation, or straightforward text generation. It is an affordable choice for startups and small businesses.

When businesses should consider Gemini Flash Lite

Businesses with limited budgets or simpler requirements for AI-assisted content generation should choose Gemini Flash Lite.

Anthropic’s Models

Claude 3 Haiku

Anthropic’s Claude 3 Haiku is the entry-level model in the Claude 3 family, competitively priced and comparable to OpenAI’s GPT-3.5. It’s well-suited for tasks like text generation, basic data analysis, and document summarization. With a context window of 200,000 tokens, it offers better input-handling capabilities than most entry-level models.

When businesses should consider Claude 3 Haiku

Organizations seeking a budget-friendly yet capable model for general-purpose text tasks should consider Claude 3 Haiku.

Claude 3 Sonnet

Claude 3 Sonnet is a mid-tier option designed for long-form content generation and detailed analysis. With its 200,000-token context window, it’s particularly effective for document-heavy workflows like legal or scientific analysis.

When businesses should consider Claude 3 Sonnet

Businesses handling complex projects that require processing large volumes of text, such as research or legal documentation, should consider Claude 3 Sonnet.

Claude 3 Opus

As Anthropic’s premium offering, Claude 3 Opus is tailored for intricate, high-stakes tasks requiring deep reasoning and advanced problem-solving capabilities. It builds on Sonnet’s features, making it ideal for enterprise applications.

When businesses should consider Claude 3 Opus

Enterprises dealing with advanced problems or requiring the highest level of performance and reasoning should opt for Claude 3 Opus.

xAI’s Grok Models

Grok-1

Grok-1 is xAI’s initial offering, comparable to early GPT models. It is a 314-billion-parameter Mixture-of-Experts (MoE) model trained from scratch, suited for tasks like basic question answering, information retrieval, and simple coding assistance. With a context window of 8,192 tokens, it offers reasonable input-handling capabilities for introductory tasks.

When businesses should consider Grok-1

Organizations seeking an open-source, foundational model for experimentation and basic NLP tasks should consider Grok-1.

Grok-2

Grok-2 is a mid-tier option designed for improved chat capabilities, coding assistance, and reasoning tasks. With its enhanced architecture and performance, it’s particularly effective for users seeking a more versatile AI assistant. At launch, xAI reported that an early version of Grok-2 outperformed Claude 3.5 Sonnet and GPT-4 Turbo on the LMSYS leaderboard, and the model also performs well in vision-based tasks.

When businesses should consider Grok-2

Businesses handling projects that require a balance of speed and quality in various text and vision-related tasks should consider Grok-2.

Grok-3

As xAI’s premium offering, Grok-3 is tailored for intricate, high-stakes tasks requiring deep reasoning, advanced problem-solving capabilities, and real-time data integration. It builds on Grok-2’s features, making it ideal for demanding technical fields.

Grok-3 offers DeepSearch for step-by-step reasoning, Big Brain Mode for complex tasks, a “Think” mode for adjusting cognitive effort, and a “mini” version for faster responses. It reportedly relies on a massive cluster of about 200,000 H100 GPUs, with roughly 10x the computational power used for its predecessor.

When businesses should consider Grok-3

Enterprises dealing with advanced problems or requiring the highest level of performance, real-time information, and transparent reasoning should opt for Grok-3, especially with a SuperGrok subscription.

Grok-3 particularly shines in software development, scientific research, and education, offering automated coding, advanced data analysis, and engaging STEM learning tools.

TeamAI is model-agnostic – Find the best for your use case.

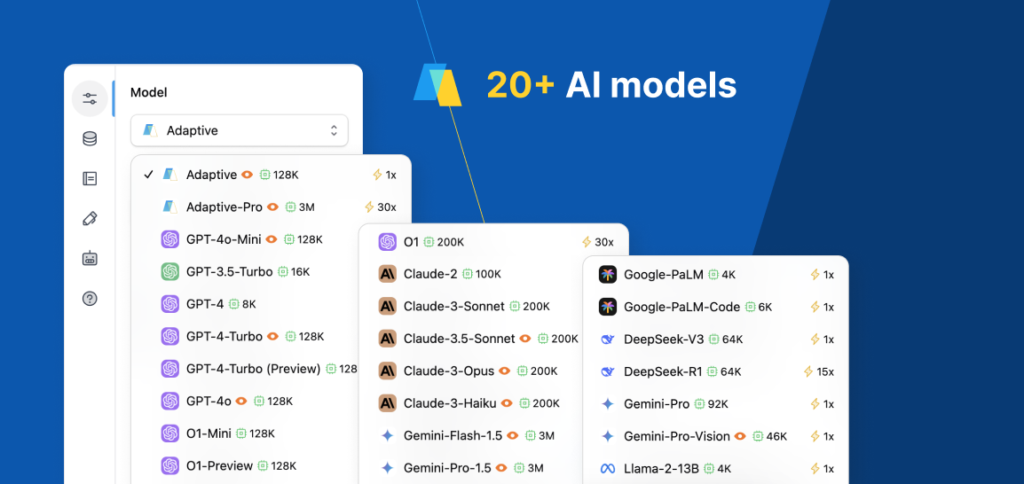

The LLMs listed above are just a few of the options available in TeamAI. With so many models competing with one another, choosing the best one can take time. You can sidestep that problem altogether.

With a generative AI solution like TeamAI, you don’t have to worry about which LLM is best. That’s because TeamAI is model-agnostic, which means that if a new LLM rises as the best next week, TeamAI can switch to that model without any hassle.
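
To make the idea of model-agnostic design concrete, here is a minimal sketch of how an application can keep its own code independent of any single model. It is not TeamAI’s implementation. The `ModelRouter` class and the stand-in backends `fake_model_a` and `fake_model_b` are hypothetical names invented for this example, and a real backend would wrap a vendor’s API call instead of returning a canned string.

```python
from typing import Callable, Dict, Optional

# A backend is any function that takes a prompt and returns generated text.
Backend = Callable[[str], str]


class ModelRouter:
    """Hypothetical registry that hides which model answers a prompt."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}
        self._active: Optional[str] = None

    def register(self, name: str, backend: Backend) -> None:
        """Add a backend; the first one registered becomes the default."""
        self._backends[name] = backend
        if self._active is None:
            self._active = name

    def use(self, name: str) -> None:
        """Switch the active model without touching any calling code."""
        if name not in self._backends:
            raise KeyError(f"unknown model: {name}")
        self._active = name

    def generate(self, prompt: str) -> str:
        """Send the prompt to whichever backend is currently active."""
        if self._active is None:
            raise RuntimeError("no model registered")
        return self._backends[self._active](prompt)


# Stand-in backends for illustration only.
def fake_model_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def fake_model_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


if __name__ == "__main__":
    router = ModelRouter()
    router.register("model-a", fake_model_a)
    router.register("model-b", fake_model_b)
    print(router.generate("Summarize this report."))
    router.use("model-b")  # swapping models is a one-line change
    print(router.generate("Summarize this report."))
```

Because the rest of the application only ever calls `generate`, adopting a newer model means registering one more backend rather than rewriting existing workflows.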

Get Started With TeamAI