ChatGPT is an application from OpenAI built on advanced large language models (LLMs), a form of generative artificial intelligence. These models interpret language inputs and generate meaningful outputs.

ChatGPT offers a user-friendly interface for working with LLMs to accomplish numerous tasks. Over time, OpenAI has released multiple model versions, each adding functionality such as faster responses and better accuracy. Knowing the differences helps you identify the best choice for your task.

Terms to know

Here are some terms to know to better understand the details in this article:

- Tokens: This term represents the smallest unit into which text data can be broken down for AI to process. Depending on the text, a token may be a single character, part of a word, or a whole word. A general rule of thumb for ChatGPT is that 1 token is about 4 characters of English text.

- Context window: This term refers to the maximum number of tokens a model can work with at once, covering both the input and the generated response. The input can include recent user prompts and AI responses from the conversation, which helps make new responses more relevant.

- Latency: This term refers to the time it takes for the AI to process a prompt and start returning a response.
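To make these terms concrete, here is a minimal Python sketch that applies the 4-characters-per-token rule of thumb to estimate a prompt's size, then checks whether that prompt plus the requested output fits a model's limits. The function names are hypothetical, the heuristic is only an approximation (exact counts depend on each model's tokenizer), and the check assumes the context window must hold the input and the output together.

```python
import math

CHARS_PER_TOKEN = 4  # rule of thumb for English text; real tokenizers vary


def estimate_tokens(text: str) -> int:
    """Roughly estimate the token count of English text (about 4 characters per token)."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits(prompt_tokens: int, output_tokens: int,
         context_window: int, output_limit: int) -> bool:
    """Return True if a request respects both the output limit and the context window.

    Assumes the context window must hold the prompt (including any conversation
    history) and the generated response together.
    """
    if output_tokens > output_limit:
        return False
    return prompt_tokens + output_tokens <= context_window


prompt = "Summarize the attached meeting notes in three bullet points. " * 200
n = estimate_tokens(prompt)
print(n)
# GPT-3.5 Turbo limits from this article: 16,385-token window, 4,096-token output cap
print(fits(n, 1_000, context_window=16_385, output_limit=4_096))
```

Treat the estimate as a planning aid; leave headroom rather than filling the window exactly.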

GPT-3.5 Turbo

This version is one of the older ChatGPT models. While newer varieties exist, GPT-3.5 Turbo is still useful for its fast responses and low cost. It can understand and generate natural language and code.

While GPT-3.5 Turbo is still a high-quality model, its main limitations are its older knowledge, smaller context window, and lower output limit.

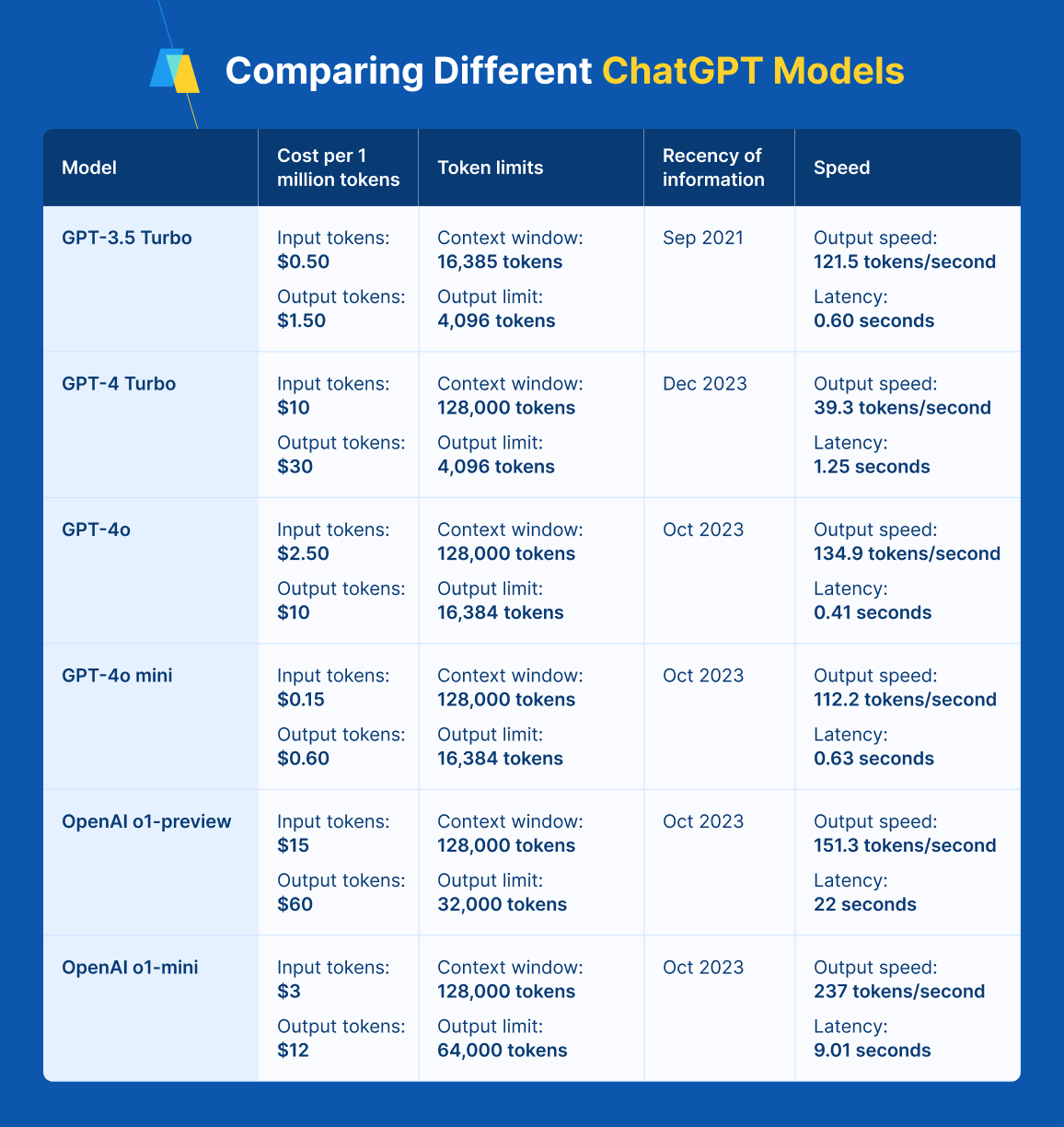

Here’s an overview of GPT-3.5 Turbo across a few factors:

- Cost: The latest version of GPT-3.5 Turbo is relatively cost-effective, with pricing of $0.50 per 1 million tokens of input and $1.50 per 1 million tokens of output.

- Token limits: This model has a context window of 16,385 tokens and a maximum output of 4,096 tokens.

- Recency of information: This model’s knowledge extends only to September 2021.

- Output quality: While GPT-3.5 Turbo is less nuanced and conversational than other models, it still provides acceptable output quality.

- Speed: This model is faster than average, with an output of 121.5 tokens per second and a latency of 0.60 seconds.
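Because prices are quoted per 1 million tokens, a request costs input tokens × input price ÷ 1,000,000 plus output tokens × output price ÷ 1,000,000. The sketch below applies that arithmetic to the GPT-3.5 Turbo figures listed above and adds a rough response-time estimate (latency plus output tokens divided by output speed), assuming the listed latency is the wait before output begins. `ModelSpec` is a hypothetical helper, and real timings vary with load and prompt length.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """Published figures for one model, as listed in this article."""
    input_price_per_m: float   # USD per 1 million input tokens
    output_price_per_m: float  # USD per 1 million output tokens
    tokens_per_second: float   # average output speed
    latency_s: float           # reported latency in seconds


GPT_35_TURBO = ModelSpec(0.50, 1.50, 121.5, 0.60)


def request_cost(spec: ModelSpec, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request, from per-million-token prices."""
    return (input_tokens * spec.input_price_per_m
            + output_tokens * spec.output_price_per_m) / 1_000_000


def response_time(spec: ModelSpec, output_tokens: int) -> float:
    """Rough seconds to finish: reported latency plus generation time."""
    return spec.latency_s + output_tokens / spec.tokens_per_second


# A request with 10,000 input tokens and a 1,000-token answer
print(f"${request_cost(GPT_35_TURBO, 10_000, 1_000):.4f}")  # $0.0065
print(f"{response_time(GPT_35_TURBO, 1_000):.1f} s")        # 8.8 s
```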

GPT-4 Turbo

This model is an optimized, large-scale model offering high intelligence for your tasks. It includes GPT-4 Vision, which makes it multimodal: it accepts both image and text inputs.

GPT-4 Turbo offers strong processing power and can solve complex scientific and mathematical problems. Its responses are more nuanced and more accurate than those of earlier models, and it is less prone to hallucinations. Here’s an overview of a few key details:

- Cost: The price is $10 per 1 million input tokens and $30 per 1 million output tokens.

- Token limits: The model has a context window of 128,000 tokens and an output limit of 4,096 tokens.

- Recency of information: GPT-4 Turbo draws on a broad, diverse selection of internet sources, with knowledge extending to December 2023.

- Output quality: This model suits demanding applications, producing knowledgeable responses to prompts and queries.

- Speed: This model is slower than GPT-3.5 Turbo, with an output of 39.3 tokens per second and a latency of 1.25 seconds.
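Because GPT-4 Turbo accepts images alongside text, one API request can mix both in a single user message. The sketch below only builds a JSON body in the shape used by OpenAI’s Chat Completions endpoint, with an `image_url` content part; it sends nothing. `gpt-4-turbo` is the API model identifier, while the helper name, question, and image URL are hypothetical placeholders. Check OpenAI’s current API reference before relying on the field names.

```python
import json


def build_vision_request(question: str, image_url: str,
                         model: str = "gpt-4-turbo",
                         max_tokens: int = 500) -> dict:
    """Build a Chat Completions request body combining text and one image."""
    return {
        "model": model,
        "max_tokens": max_tokens,  # keep at or below the 4,096-token output limit
        "messages": [
            {
                "role": "user",
                # A multimodal message is a list of content parts, not a plain string
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


body = build_vision_request(
    "What trend does this chart show?",
    "https://example.com/chart.png",  # hypothetical image URL
)
print(json.dumps(body, indent=2))
```

In practice this body would be sent with an API key, for example through OpenAI’s official SDK, which accepts the same `messages` structure.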

GPT-4o and GPT-4o mini

These are OpenAI’s most advanced GPT models. They offer high intelligence and can complete complex, multistep tasks. Like GPT-4 Turbo, they accept multimodal inputs, such as text and images.

Their data extraction, classification, and verbal reasoning capabilities are much more advanced than those of earlier ChatGPT models. See how they compare to other ChatGPT versions:

- Cost: GPT-4o costs $2.50 per 1 million input tokens and $10 per 1 million output tokens. GPT-4o mini costs $0.15 per 1 million input tokens and $0.60 per 1 million output tokens.

- Token limits: Both models have a context window of 128,000 tokens and a maximum output of 16,384 tokens.

- Recency of information: These models’ knowledge extends to October 2023.

- Output quality: These models provide the highest-quality outputs among current ChatGPT versions, especially for complex tasks.

- Speed: GPT-4o has an output speed of 134.9 tokens per second and a latency of 0.41 seconds, making it faster than previous models. The mini is slightly slower, with an output speed of 112.2 tokens per second and a latency of 0.63 seconds.

If speed and accuracy matter, GPT-4o is the best GPT model for your needs. If you prefer lower costs but still want the latest functions, the GPT-4o mini is an excellent option.
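The cost-versus-speed trade-off between the two GPT-4o models can be quantified with the figures above. This sketch assumes a hypothetical workload of 1,000 requests, each with 2,000 input tokens and 500 output tokens, and estimates total cost and rough time per request (reported latency plus output tokens divided by output speed). Real results depend on your prompts and on current pricing.

```python
# Figures for GPT-4o and GPT-4o mini as listed in this article
MODELS = {
    "GPT-4o": {"in": 2.50, "out": 10.00, "tps": 134.9, "latency": 0.41},
    "GPT-4o mini": {"in": 0.15, "out": 0.60, "tps": 112.2, "latency": 0.63},
}

# Hypothetical workload: 1,000 requests of 2,000 input + 500 output tokens each
REQUESTS, INPUT_TOKENS, OUTPUT_TOKENS = 1_000, 2_000, 500


def workload_cost(m: dict) -> float:
    """Total USD for the workload; prices are per 1 million tokens."""
    total_in = REQUESTS * INPUT_TOKENS
    total_out = REQUESTS * OUTPUT_TOKENS
    return (total_in * m["in"] + total_out * m["out"]) / 1_000_000


def seconds_per_request(m: dict) -> float:
    """Rough time per request: reported latency plus generation time."""
    return m["latency"] + OUTPUT_TOKENS / m["tps"]


for name, m in MODELS.items():
    print(f"{name}: ${workload_cost(m):.2f} total, "
          f"~{seconds_per_request(m):.1f} s per request")
```

With these figures, GPT-4o costs $10.00 for the workload and GPT-4o mini $0.60, while the mini takes roughly one second longer per request (about 5.1 s versus 4.1 s).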

OpenAI o1-preview and o1-mini

While these don’t carry the GPT name, they are among OpenAI’s latest releases. They are still in beta, but overall, these models favor quality over speed, thinking through problems before responding.

These enhanced reasoning capabilities help you solve complex problems in science, coding, math, and other areas. These models also have enhanced safety features.

OpenAI o1-mini is a faster, more cost-effective version of o1-preview. It’s optimized for STEM topics, with more limited factual knowledge in other areas, such as dates and biographies. In non-STEM areas, it is comparable to earlier models like GPT-4o mini.

Here’s how they compare to earlier versions:

- Cost: OpenAI o1-preview is quite a bit more expensive than other models. It costs $15 per 1 million input tokens and $60 per 1 million output tokens. OpenAI o1-mini costs $3 per 1 million input tokens and $12 per 1 million output tokens, slightly more than GPT-4o.

- Token limits: Like the GPT-4o models, these models have a context window of 128,000 tokens. OpenAI o1-preview has an output limit of 32,000 tokens and o1-mini an output limit of 64,000 tokens, both higher than the 4o models.

- Recency of information: These models share the October 2023 knowledge cutoff of GPT-4o and GPT-4o mini.

- Output quality: These models offer the highest output quality of any OpenAI product for STEM tasks. In other areas, they are comparable to earlier versions.

- Speed: The output speed of o1-preview, 151.3 tokens per second, is comparable to GPT-4o’s, but its added reasoning time leads to a much higher latency of about 22 seconds. The o1-mini has an output speed of 237 tokens per second and a latency of 9.01 seconds; both figures are higher than average.
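Because o1 models reason before answering, their cost depends on more than the visible answer: OpenAI bills the hidden reasoning tokens at the output-token price. This sketch adds them to the billed output when estimating cost. The reasoning-token count used here (3,000) is a made-up assumption, since it varies by prompt, and the time estimate assumes the reported latency already covers the thinking phase.

```python
# o1 figures as listed in this article
O1 = {
    "o1-preview": {"in": 15.0, "out": 60.0, "tps": 151.3, "latency": 22.0},
    "o1-mini": {"in": 3.0, "out": 12.0, "tps": 237.0, "latency": 9.01},
}


def o1_cost(m: dict, input_tokens: int, visible_output: int,
            reasoning_tokens: int) -> float:
    """USD cost; hidden reasoning tokens are billed at the output-token price."""
    billed_output = visible_output + reasoning_tokens
    return (input_tokens * m["in"] + billed_output * m["out"]) / 1_000_000


def o1_time(m: dict, visible_output: int) -> float:
    """Rough seconds: reported latency (assumed to include thinking) plus streaming time."""
    return m["latency"] + visible_output / m["tps"]


# Hypothetical STEM prompt: 1,000 input tokens, a 2,000-token answer,
# and an assumed 3,000 hidden reasoning tokens
for name, m in O1.items():
    print(f"{name}: ${o1_cost(m, 1_000, 2_000, 3_000):.3f}, "
          f"~{o1_time(m, 2_000):.1f} s")
```

Under these assumptions, o1-preview comes to about $0.315 and 35 seconds, versus about $0.063 and 17 seconds for o1-mini.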

o3-mini and o3 Series

Announced in December 2024, with o3-mini released on January 31, 2025, these models represent OpenAI’s latest advancement in reasoning-focused AI technology. The o3 Series builds upon the success of the o1 models while offering improved cost efficiency and specialized capabilities for STEM applications.

These models excel at complex problem-solving tasks, particularly in scientific and mathematical domains, while maintaining better cost efficiency than their o1 predecessors. The o3-mini variant provides a more accessible entry point to advanced reasoning capabilities without compromising core functionality.

Here’s how these models compare to other versions:

- Cost: The o3 Series offers improved cost efficiency compared to o1 models. OpenAI o3-mini, the most economical way to access these reasoning capabilities, costs $1.10 per 1 million input tokens and $4.40 per 1 million output tokens.

- Token limits: OpenAI o3-mini has a context window of 200,000 tokens and an output limit of 100,000 tokens. Limits for the full o3 model are unconfirmed.

- Recency of information: OpenAI o3-mini shares the October 2023 knowledge cutoff of the o1 and GPT-4o models.

- Output quality: While maintaining high standards across all tasks, these models particularly excel in STEM applications, offering enhanced reasoning capabilities for scientific and mathematical problems.

- Speed: The o3 Series introduces improved processing efficiency; OpenAI reports that o3-mini responds about 24% faster than o1-mini on average while maintaining sophisticated reasoning capabilities.

The o3 Series represents a significant step forward in specialized AI processing, particularly for organizations focused on scientific and technical applications. The o3-mini variant makes this technology more accessible to smaller teams and individual developers while maintaining core STEM capabilities.

GPT-4.5 (Orion) (announced)

This model represents OpenAI’s latest advancement in non-chain-of-thought processing, marking a significant milestone as the company’s final model in this category. Announced in early 2025, GPT-4.5 (Orion) bridges the gap between traditional language models and the upcoming chain-of-thought processing systems planned for GPT-5.

As a non-chain-of-thought model, GPT-4.5 is expected to respond faster than the o-series models, which spend extra time reasoning before they answer. It is designed to provide more accurate and nuanced responses than GPT-4 Turbo without the computational overhead of chain-of-thought processing.

Here’s how GPT-4.5 compares to other versions:

- Cost: Pricing details are pending release.

- Token limits: Unconfirmed

- Recency of information: Unconfirmed

- Output quality: Early testing shows significant improvements in accuracy and reasoning capabilities compared to GPT-4 Turbo, while maintaining faster response times than o-series models.

- Speed: While specific performance metrics are yet to be released, OpenAI has indicated that GPT-4.5 will offer a better speed-to-quality ratio than previous models.

As OpenAI’s last non-chain-of-thought model, GPT-4.5 represents a crucial stepping stone toward more advanced AI systems. Its release, expected within weeks of its February 12, 2025 announcement, will provide users with enhanced capabilities before the transition to GPT-5’s unified chain-of-thought architecture.

GPT-5 (announced)

This model represents OpenAI’s most ambitious advancement yet, unifying their o-series and GPT-series models into a single integrated system. Announced in February 2025, GPT-5 marks a significant shift in OpenAI’s approach to AI model deployment, introducing tiered intelligence levels that adapt to different user needs.

GPT-5 will incorporate o3 technology and advanced reasoning capabilities. It also introduces a new approach to AI accessibility, with different intelligence levels available through subscription tiers.

Here’s how GPT-5 compares to other versions:

- Cost: Pricing varies by intelligence tier, with free users accessing standard intelligence settings and Plus/Pro users getting higher intelligence levels. Specific API pricing details are pending release.

- Token limits: Unconfirmed

- Recency of information: Unconfirmed

- Output quality: OpenAI has not yet published benchmark results. It has said GPT-5 will incorporate voice, canvas, search, deep research, and more.

- Speed: Unconfirmed

The integration of o3 technology and the unified model approach makes GPT-5 particularly suitable for complex tasks requiring advanced reasoning and multimodal processing. Its tiered intelligence system ensures accessibility for general users while providing enhanced capabilities for professional applications.

Which model is the best for you?

Each OpenAI model has advantages and disadvantages, so the ideal choice depends on the use case. For example, a complex STEM-related task will benefit from a reasoning model such as OpenAI o1-preview or o3-mini.

On the other hand, a more generalized task where you want a quick answer will benefit from one of the GPT-4o models. If cost is a major determining factor, you may choose GPT-3.5 Turbo or GPT-4o mini.
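The cost-driven part of this advice can be automated. The sketch below picks the cheapest model whose context window and output limit can hold a given request, using the prices and limits listed in this article for the GPT-3.5, GPT-4, GPT-4o, and o1 models. It assumes the context window must hold input and output together, and it deliberately ignores quality and speed; `cheapest_fit` is a hypothetical helper, not a TeamAI or OpenAI feature.

```python
from typing import Optional

# (name, input $/1M, output $/1M, context window, output limit) from this article
MODELS = [
    ("GPT-3.5 Turbo", 0.50, 1.50, 16_385, 4_096),
    ("GPT-4 Turbo", 10.00, 30.00, 128_000, 4_096),
    ("GPT-4o", 2.50, 10.00, 128_000, 16_384),
    ("GPT-4o mini", 0.15, 0.60, 128_000, 16_384),
    ("o1-preview", 15.00, 60.00, 128_000, 32_000),
    ("o1-mini", 3.00, 12.00, 128_000, 64_000),
]


def cheapest_fit(input_tokens: int, output_tokens: int) -> Optional[str]:
    """Return the cheapest listed model whose limits can hold the request, or None."""
    best_name, best_cost = None, float("inf")
    for name, p_in, p_out, window, out_limit in MODELS:
        if output_tokens > out_limit or input_tokens + output_tokens > window:
            continue  # request does not fit this model
        cost = (input_tokens * p_in + output_tokens * p_out) / 1_000_000
        if cost < best_cost:
            best_name, best_cost = name, cost
    return best_name


print(cheapest_fit(2_000, 500))     # GPT-4o mini
print(cheapest_fit(2_000, 20_000))  # o1-mini (only the o1 models allow 20,000 output tokens)
```

Filtering on limits first and then minimizing cost keeps the rule transparent; a fuller selector would also weigh the quality and speed differences described above.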

Other AI models, such as Google DeepMind’s Gemini and Anthropic’s Claude, have their own strengths that can make them better options than ChatGPT for certain applications.

For example, Gemini is known for multimodality across text, video, image, audio, and code. Claude excels in safety and misuse prevention and is also well suited to natural conversations.

Discover the Advantages of Using Multiple LLMs

Get all the best AI models in one

OpenAI delivers numerous models, all with different benefits and use cases. Besides OpenAI, other publishers have created exceptional AI chatbots with unique functionality. If you want the flexibility to operate and harness different models, try TeamAI.

This platform offers the latest and best LLMs, including GPT-4o, OpenAI o1-preview, Gemini, and Claude 3. You gain over 20 options in a single workspace without managing multiple API keys.

All you need to do is open a workspace and select the desired model from the Chat Controls sidebar. You can even engage TeamAI’s Adaptive model to automatically select the best model for each prompt.

Sign up for a TeamAI account to experiment with multiple models in one place.