Claude is a generative artificial intelligence (AI) chatbot made by Anthropic, an AI research company based in San Francisco that builds products focused on safety and the long-term benefit of humanity.

Claude models are known for nuanced reasoning and detailed analysis compared to similar AI chatbots. Claude comes in several versions that vary in accuracy, speed, and cost. Explore the differences between them to select the right one for your next task.

Claude Instant 1.2 and Claude 2: The early models

These are some of Anthropic’s earlier models. They are now considered legacy options, with the Claude 3 family recommended for most tasks.

Claude Instant 1.2 is the predecessor of Claude Haiku, built for efficient processing. Claude 2 and 2.1 were the predecessors of Claude 3, with Claude 2.1 improving on the accuracy of Claude 2.

These early Claude versions are valued for their natural conversations and safety, as they are harder to prompt into producing dangerous or offensive output than products from other developers. They also stand out among similar AI chatbots for their coding skills.

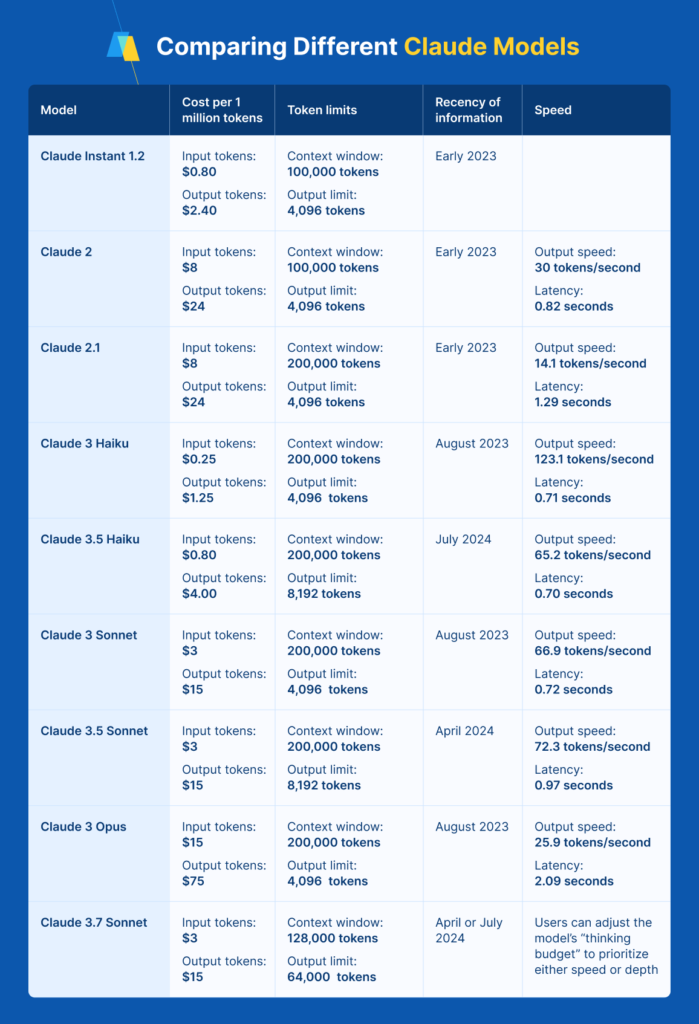

Here’s an overview of these models’ capabilities:

- Cost: Claude Instant 1.2 costs $0.80 per million input tokens and $2.40 per million output tokens. Both Claude 2 models are ten times as expensive, at $8.00 per million input tokens and $24.00 per million output tokens.

- Context window: Claude Instant 1.2 and Claude 2 have a window of 100,000 tokens, while Claude 2.1 has a 200,000 token window. The max output of all versions is 4,096 tokens — or about 3,100 words.

- Knowledge cutoff: The training data for all three models cuts off in early 2023, so newer models are a better choice for tasks requiring recent information.

- Output quality: These models all deliver strong performance, but Claude Instant 1.2 prioritizes speed and cost-effectiveness, while Claude 2 and 2.1 focus more on output quality.

- Speed: These models are slower than their counterparts in the Claude 3 family. Claude 2 has an average output speed of 30 tokens per second and a latency of 0.82 seconds. Claude 2.1 is slower, at 14.1 tokens per second with a latency of 1.29 seconds.
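
To see how per-million-token prices translate into a bill, here is a minimal sketch. The function name `estimate_cost` and the sample workload (2,000 input tokens and 500 output tokens per request) are assumptions for illustration, and the dictionary keys are display names rather than API identifiers. The prices are the ones listed above.

```python
# Prices in USD per million tokens, taken from the list above.
# Keys are display names for illustration, not API model identifiers.
PRICES = {
    "Claude Instant 1.2": {"input": 0.80, "output": 2.40},
    "Claude 2": {"input": 8.00, "output": 24.00},
}


def estimate_cost(model, input_tokens, output_tokens):
    """Return the estimated USD cost of a single request."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Hypothetical workload: 2,000 tokens in, 500 tokens out.
for model in PRICES:
    cost = estimate_cost(model, input_tokens=2_000, output_tokens=500)
    print(f"{model}: ${cost:.4f} per request")
```

Under these assumptions, the same request costs $0.0028 on Claude Instant 1.2 and $0.0280 on Claude 2.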

Claude 3 Haiku and Claude 3.5 Haiku: Fastest models

These models are the fastest and most compact in Anthropic’s Claude 3 family. They work well for simple queries, delivering results almost instantly, and their responses mimic human interaction well. Claude 3.5 Haiku is the next generation of Claude’s fastest model. It brings increased accuracy, surpassing Claude 3 Opus in certain contexts.

See how the Haiku models compare in key characteristics:

- Cost: Claude 3 Haiku is cheaper than any previous Claude version, costing $0.25 per million input tokens and $1.25 per million output tokens. Claude 3.5 Haiku costs a little over three times as much, at $0.80 per million input tokens and $4.00 per million output tokens, in exchange for a substantial increase in quality.

- Context window: Like Claude 2.1, both Haiku models have a context window of 200,000 tokens. The max output of Claude 3 Haiku is 4,096 tokens, while Claude 3.5 Haiku doubles this to 8,192 tokens, or about 6,200 words.

- Knowledge cutoff: Claude 3 Haiku has slightly more recent knowledge than earlier models, with a cutoff in August 2023. Claude 3.5 Haiku has the most recent knowledge of the Claude 3 and 3.5 models, with a cutoff of July 2024.

- Output quality: While the Haiku models are most known for their speed, they still bring accuracy and intelligence to tasks.

- Speed: Both Haiku models are significantly faster than Anthropic’s earlier versions. Claude 3 Haiku has an output of 123.1 tokens per second and a latency of 0.71 seconds. Claude 3.5 Haiku generates 65.2 tokens per second and has a latency of 0.70 seconds.
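
The speed figures above can be combined into a rough response-time estimate. The sketch below assumes that latency means the delay before the first token and that output then streams at a steady rate; both are simplifications, and the function name and 500-token response length are illustrative.

```python
# (output tokens per second, latency in seconds), as quoted above.
SPEEDS = {
    "Claude 3 Haiku": (123.1, 0.71),
    "Claude 3.5 Haiku": (65.2, 0.70),
}


def estimate_response_seconds(tokens_per_second, latency_seconds, output_tokens):
    """Latency plus the time to generate output_tokens at a steady rate."""
    return latency_seconds + output_tokens / tokens_per_second


# Hypothetical response length of 500 tokens.
for name, (tps, latency) in SPEEDS.items():
    seconds = estimate_response_seconds(tps, latency, output_tokens=500)
    print(f"{name}: about {seconds:.1f} s for 500 tokens")
```

With these numbers, a 500-token answer takes about 4.8 seconds on Claude 3 Haiku and about 8.4 seconds on Claude 3.5 Haiku. For long responses, output speed matters far more than the near-identical latencies.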

Claude 3 Sonnet and Claude 3.5 Sonnet: Strong all-around performers

Those seeking a balance of performance and cost will gain the most value from Claude Sonnet. Claude 3 Sonnet works well for enterprise applications, offering excellent performance while managing costs.

You can use it for everything from customer support to complex coding tasks. Claude 3.5 Sonnet was Anthropic’s most intelligent model at its release while maintaining fast speeds.

Compare Sonnet to other Anthropic models:

- Cost: The increased performance comes with a higher cost compared to Haiku: $3.00 per million input tokens and $15.00 per million output tokens. However, this is still cheaper than the earlier Claude 2 models.

- Context window: Like all other Claude 3 and 3.5 models, Sonnet operates with a context window of 200,000 tokens. The max output is 4,096 tokens for all three Claude 3 models, while Claude 3.5 Sonnet allows 8,192 tokens.

- Knowledge cutoff: The knowledge cutoff for Claude 3 Sonnet is the same as for Claude 3 Haiku and Claude 3 Opus: August 2023. Claude 3.5 Sonnet has a cutoff of April 2024, slightly earlier than Claude 3.5 Haiku’s July 2024.

- Output quality: Sonnet models balance quality and speed, making them a good general-purpose choice, especially for high-use applications like data processing or sales tasks. Claude 3.5 Sonnet brings significant quality improvements while still maintaining fast speeds.

- Speed: The increase in quality costs some speed compared to Claude 3 Haiku. Claude 3 Sonnet has an output speed of 66.9 tokens per second and a latency of 0.72 seconds, roughly half Claude 3 Haiku’s output speed. Claude 3.5 Sonnet has an output speed of 72.3 tokens per second and a latency of 0.97 seconds.
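
The output limits above are given in tokens, which can be hard to picture. This sketch converts between tokens and words using the ratio implied earlier in the article (4,096 tokens is about 3,100 words). The ratio is only a rule of thumb that varies with the text and language, and the helper names are hypothetical.

```python
import math

# Rule of thumb implied by the article: 4,096 tokens is about 3,100 words.
# Real ratios vary by text and language, so treat results as estimates.
WORDS_PER_TOKEN = 3100 / 4096


def approx_words(tokens):
    """Estimate how many words a given number of tokens holds."""
    return round(tokens * WORDS_PER_TOKEN)


def approx_tokens(words):
    """Estimate how many tokens a given number of words needs."""
    return math.ceil(words / WORDS_PER_TOKEN)


def fits_output_limit(words, max_output_tokens):
    """Check whether a response of `words` words fits the output limit."""
    return approx_tokens(words) <= max_output_tokens


print(approx_words(8192))             # words in the Claude 3.5 Sonnet limit
print(fits_output_limit(5000, 4096))  # 5,000-word draft, Claude 3 limit
print(fits_output_limit(5000, 8192))  # 5,000-word draft, Claude 3.5 limit
```

By this estimate, a 5,000-word draft would not fit in a single 4,096-token response but would fit within the 8,192-token limit of Claude 3.5 Sonnet.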

Claude 3 Opus: Effective for highly complex tasks

Claude 3 Opus is the most intelligent member of the Claude 3 family. It performs well on highly complex tasks, such as open-ended prompts. Compared to other Claude 3 models, it brings enhanced fluency and a more human-like understanding.

Its performance is summarized below:

- Cost: This model is Anthropic’s most expensive, at $15.00 per million input tokens and $75.00 per million output tokens.

- Context window: Opus has the same context window as all Claude 3 and 3.5 models, at 200,000 tokens, with a maximum output of 4,096 tokens.

- Knowledge cutoff: Like other Claude 3 iterations, Opus has a knowledge cutoff of August 2023.

- Output quality: This version is ideal for tasks where quality and detail matter. Potential applications include advanced strategy and detailed research reviews.

- Speed: Since speed is not a priority for this model, it is slower than Haiku or Sonnet. Its output speed is 25.9 tokens per second, and its latency is 2.09 seconds. It spends time thinking through prompts before offering a response.

Claude 3.7 Sonnet: Best for advanced reasoning and versatility

Claude 3.7 Sonnet is Anthropic’s latest addition, designed to deliver a new level of reasoning and adaptability. This model introduces an innovative feature called “extended thinking mode,” allowing users to toggle between quick, concise responses and detailed, step-by-step analysis tailored to complex tasks. It combines the strengths of earlier Sonnet models with improved precision, making it ideal for both general-purpose and high-level applications.

Here’s how Claude 3.7 Sonnet compares to other Anthropic models:

- Cost: Claude 3.7 Sonnet maintains the standard pricing structure of $3.00 per million input tokens and $15.00 per million output tokens. For extended reasoning tasks, additional “thinking tokens” are factored into the cost, allowing users to balance speed, quality, and budget.

- Context window: Like the Claude 3 and 3.5 models, Claude 3.7 Sonnet has a context window of 200,000 tokens. It supports a maximum output of 64,000 tokens, with up to 128,000 tokens available as a beta feature, so it can produce far longer responses than earlier models.

- Knowledge cutoff: Anthropic lists a late 2024 knowledge cutoff for Claude 3.7 Sonnet, later than the April and July 2024 cutoffs of the 3.5 models.

- Output quality: This release builds upon previous Sonnet models with enhanced reasoning and coding capabilities. It performs exceptionally well in advanced tasks like math problem-solving, competitive programming, and detailed research reviews. Claude 3.7 Sonnet consistently achieves state-of-the-art results on benchmarks, including SWE-bench Verified and TAU-bench.

- Speed: Users can adjust the model’s “thinking budget” to prioritize either speed or depth. In standard mode, it delivers rapid, high-quality responses, while extended thinking mode ensures highly detailed, well-reasoned answers. This flexibility makes it an excellent choice for both quick-turnaround tasks and intricate problem-solving.
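
The sketch below shows one way the "thinking budget" toggle might look when building a request for Anthropic’s Messages API. It only assembles the request arguments and does not call the API. The helper `build_request` is hypothetical, and the model ID, the `thinking` parameter shape, and the 1,024-token minimum budget are assumptions based on Anthropic’s published API documentation at the time of writing, so verify them against the current docs.

```python
def build_request(prompt, thinking_budget=None, max_tokens=4096):
    """Build keyword arguments for a Messages API call (hypothetical helper).

    thinking_budget=None keeps standard mode; an integer enables extended
    thinking with that many tokens reserved for reasoning.
    """
    request = {
        "model": "claude-3-7-sonnet-20250219",  # assumed model ID; check the docs
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # Assumed constraints: at least 1,024 tokens and below max_tokens.
        if thinking_budget < 1024:
            raise ValueError("thinking budget must be at least 1,024 tokens")
        if thinking_budget >= max_tokens:
            raise ValueError("thinking budget must be smaller than max_tokens")
        request["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return request


quick = build_request("Summarize this paragraph.")
deep = build_request(
    "Prove that the square root of 2 is irrational.",
    thinking_budget=8000,
    max_tokens=16000,
)
print("thinking" in quick, deep["thinking"]["budget_tokens"])
```

With the official Python SDK installed, a dictionary like this could be passed as `anthropic.Anthropic().messages.create(**request)`. A larger budget generally means slower, more expensive, and more thorough answers.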

Claude 3.7 Sonnet’s hybrid reasoning capabilities make it one of the most versatile models in Anthropic’s lineup. Whether you need fast answers, in-depth analysis, or a balance of the two, this model offers exceptional performance and adaptability for a wide range of use cases.

How to choose the right model

With so many Claude models available, you may wonder which is the right choice for each task. Anthropic has made the process more manageable by dividing its recent models into three tiers (Haiku, Sonnet, and Opus) that trade off speed, intelligence, and cost.

Haiku models are the most efficient and the lowest in cost, while Opus is the most intelligent and the most expensive. Sonnet balances speed, intelligence, and price. You can decide which makes the most sense for your task depending on the characteristics you value most.
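
The trade-off described above can be written as a small decision rule. This is an illustrative simplification rather than guidance from Anthropic; the function name and its two yes/no inputs are assumptions.

```python
def pick_tier(needs_deep_reasoning, speed_or_budget_sensitive):
    """Suggest a Claude tier from two yes/no questions (illustrative rule)."""
    if needs_deep_reasoning and not speed_or_budget_sensitive:
        return "Opus"    # quality first, cost and speed secondary
    if speed_or_budget_sensitive and not needs_deep_reasoning:
        return "Haiku"   # fast and cheap for simple queries
    return "Sonnet"      # balanced default for everything in between


print(pick_tier(needs_deep_reasoning=False, speed_or_budget_sensitive=True))
print(pick_tier(needs_deep_reasoning=True, speed_or_budget_sensitive=False))
print(pick_tier(needs_deep_reasoning=True, speed_or_budget_sensitive=True))
```

The three calls suggest Haiku, Opus, and Sonnet respectively. In practice, testing a few representative prompts on each tier is a more reliable guide than any fixed rule.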

Comparing the characteristics of Claude products can point you to the best choice from Anthropic, but what’s the overall best AI chatbot for your task? Numerous developers, such as OpenAI and Google DeepMind, have created AI chatbots of their own.

ChatGPT is known for its versatile design and skills in creative writing tasks, while Gemini provides strong real-time data analysis capabilities. Comparing models from different creators will help you find the best one for each task.

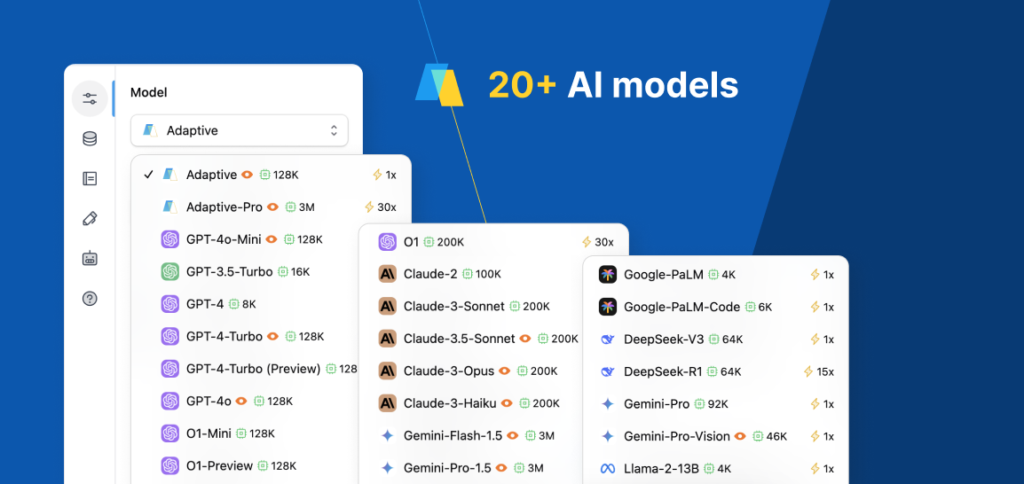

Use all the best AI chatbots

Gain the flexibility to operate all the best AI chatbots in one place with TeamAI. This platform combines the latest models from all the leading developers.

You can access Claude 3.5 Sonnet, OpenAI o1-preview, Gemini 4, and more in a single platform with no need to juggle multiple API keys. Using TeamAI, you can choose the model you want from the Chat Controls sidebar or even use TeamAI’s Adaptive model that will pick for you based on the prompt.

Start your account to see what you can do with access to multiple models in just a few clicks.