As AI systems like ChatGPT become more advanced and widely adopted, proper governance and oversight must be in place to ensure these technologies are deployed safely, ethically, and effectively. Though AI promises many benefits, it also has risks that must be proactively addressed.

When implementing AI like ChatGPT or TeamAI, organizations should have transparent processes and safeguards to ensure quality assurance. One crucial step is continuously monitoring outputs for accuracy, relevance, and potential harm.

Organizations can monitor AI systems by establishing human review processes where employees are trained to assess outputs for issues and provide feedback to refine the system.

Generative AI cannot operate on a set-it-and-forget-it basis; the tools need constant oversight. Because manual review alone does not scale, companies can start by looking for ways to automate parts of the review process, such as collecting metadata on AI systems and developing standard mitigations for specific risks.
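As a rough illustration of what automating part of the review could look like, the Python sketch below records metadata for each AI response and attaches a standard mitigation when a simple rule fires. Everything in it is an assumption made for illustration: the rule names, the crude checks, and the `record_output` and `needs_human_review` helpers are hypothetical and are not part of ChatGPT, TeamAI, or any other product.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical risk rules. Each maps a risk name to a crude check on the
# response text and a standard mitigation to apply when the check fires.
RISK_RULES = {
    "possible_pii": {
        # Stand-in for a real detector: flags anything containing "@".
        "check": lambda text: "@" in text,
        "mitigation": "redact before sharing",
    },
    "unverified_claim": {
        # Flags absolute wording that often signals an unsupported claim.
        "check": lambda text: bool(
            {"guaranteed", "always", "never"} & set(re.findall(r"[a-z]+", text.lower()))
        ),
        "mitigation": "route to human fact-check",
    },
}


@dataclass
class OutputRecord:
    """Metadata kept for one AI response."""
    user: str
    prompt: str
    response: str
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    risks: dict = field(default_factory=dict)  # risk name -> mitigation


def record_output(user, prompt, response):
    """Build a record and attach the mitigation for every rule that fires."""
    rec = OutputRecord(user, prompt, response)
    for name, rule in RISK_RULES.items():
        if rule["check"](response):
            rec.risks[name] = rule["mitigation"]
    return rec


def needs_human_review(rec):
    """A record goes to a human reviewer if any rule flagged it."""
    return bool(rec.risks)
```

Rules this simple will miss problems and raise false alarms, so a sketch like this can only help decide which outputs a human looks at first. It does not replace the human review itself.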

Furthermore, companies should have clear ethical guidelines for the appropriate use of AI, provide training for employees on the risks and limitations, and implement access controls. Providing rigorous oversight, instituting feedback loops, ensuring high-quality training data, and establishing ethical guidelines are essential to unlocking the potential of AI like ChatGPT while proactively addressing its risks.

With the right quality assurance and governance, businesses can confidently integrate these innovative technologies to drive value.

Benefits and Risks of AI in Business

The emergence of advanced AI systems like ChatGPT represents a pivotal moment, unlocking new possibilities while introducing complex challenges. There is tremendous excitement about how these technologies could transform industries – from streamlining business operations to enhancing customer engagement.

However, there are also legitimate concerns about the risks posed by AI if deployed without sufficient oversight.

Businesses are responsible for ensuring that they’re using this technology ethically and mitigating potential harm.

The stakes are high for realizing AI’s benefits and proactively addressing its risks.

Organizations must approach these systems thoughtfully, committed to establishing governance, implementing ethical practices, and prioritizing quality assurance. Careful oversight is required to ensure AI alignment with corporate values, compliance with regulations, and, most importantly, a positive impact on society.

Let’s start by understanding the limitations of ChatGPT and Generative AI generally:

Understanding ChatGPT and Generative AI’s Limitations

While ChatGPT represents an impressive technological achievement, it is critical to recognize its limitations. As advanced as ChatGPT may seem, it does not possess true intelligence or common sense. There are critical constraints around its knowledge, potential for bias, and accuracy that users must consider.

Some of the fundamental limitations are:

- ChatGPT’s knowledge is limited by its training data, which stops at a cutoff date (2021 for the original release).

- ChatGPT faces challenges around potential biases in its training data.

- ChatGPT and generative AI can sometimes generate responses that seem convincing but are factually inaccurate.

While ChatGPT is an engineering feat, users cannot fully trust its outputs. Setting appropriate expectations and verifying its information is crucial to using it responsibly. Used with its limitations in mind, ChatGPT can aid human intelligence, but it is not a replacement for it.

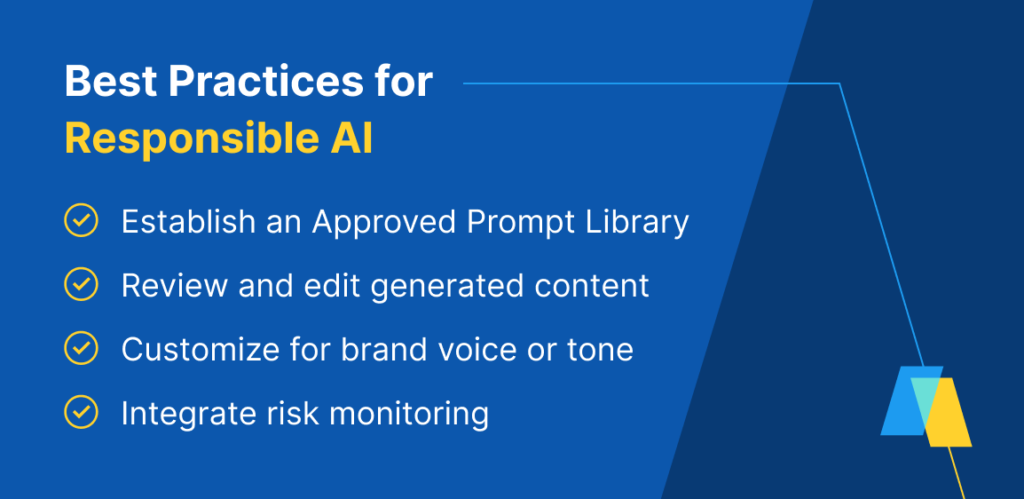

Implementing Best Practices for Responsible AI

While AI systems like ChatGPT have immense potential, organizations must establish best practices to ensure these technologies are deployed responsibly. Your business must have best practices and standard operating procedures laid out for employees.

Specific steps can help promote responses that are helpful, harmless, and honest from AI systems:

- Establish an Approved Prompt Library – Vague or poorly constructed prompts are more likely to return poor or even harmful responses.

- Review and edit generated content – Human oversight is critical; generative AI should not run without it. Reviewing outputs lets you catch errors.

- Customize for brand voice or tone – Fine-tuning the system for the needs of your specific business enhances relevance. This helps ensure alignment with brand identity.

- Integrate risk monitoring – Create a process for monitoring risks, including oversight of AI responses and of how your team uses AI. Build usage analytics so you can monitor this effectively.
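To make the approved prompt library idea more concrete, here is a minimal Python sketch. It assumes prompts are stored as reviewed templates keyed by an ID, with a `tone` field standing in for brand voice. The prompt IDs, template wording, and `build_prompt` helper are hypothetical examples, not features of any specific tool.

```python
from string import Template

# Hypothetical approved prompts, keyed by an ID that reviewers have signed off on.
APPROVED_PROMPTS = {
    "support_reply": Template(
        "Write a reply to this customer message in a $tone tone:\n$message"
    ),
    "blog_outline": Template("Outline a blog post about $topic for $audience."),
}


def build_prompt(prompt_id, **fields):
    """Fill in an approved template; refuse anything outside the library."""
    if prompt_id not in APPROVED_PROMPTS:
        raise KeyError(f"prompt {prompt_id!r} is not in the approved library")
    # Template.substitute raises KeyError if a required field is missing,
    # so half-filled prompts never reach the AI system.
    return APPROVED_PROMPTS[prompt_id].substitute(**fields)
```

An employee would call something like `build_prompt("support_reply", tone="friendly", message=customer_text)` instead of writing a prompt from scratch, which keeps wording consistent and gives reviewers a fixed set of prompts to audit.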

A multi-layered approach encompassing human oversight, risk monitoring, training refinement, and customization is required to ensure AI acts as an empowering agent rather than an unchecked, autonomous force. With concerted effort, organizations can implement robust guardrails to promote AI’s helpful, harmless, and honest use.

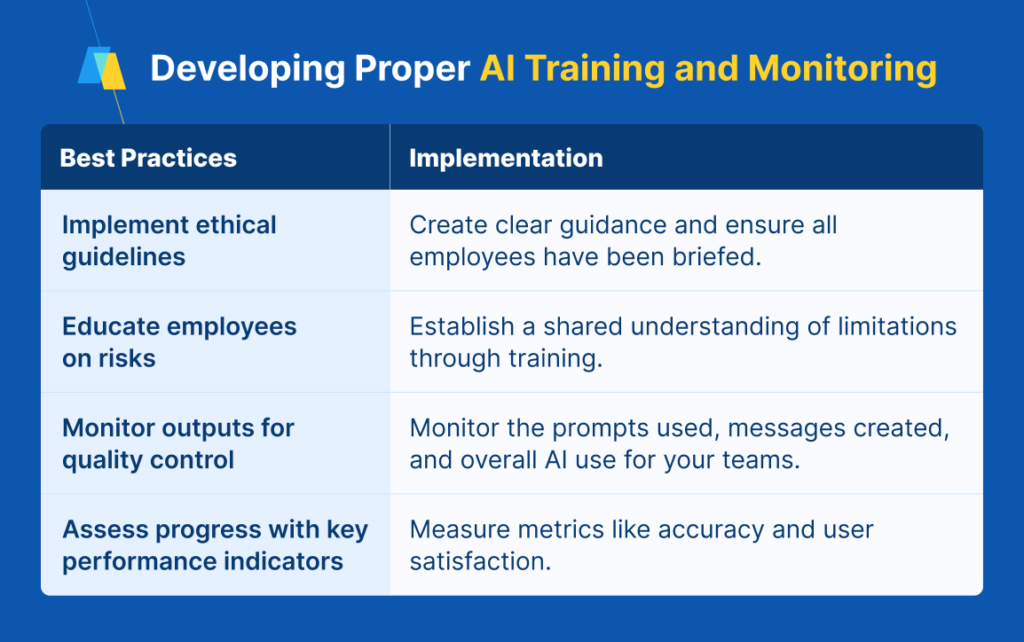

Developing Proper AI Training and Monitoring for Employees

Organizations must invest in comprehensive training and monitoring so that employees are accountable for how they use AI systems and get the best performance from them. Some best practices include:

- Implement ethical guidelines – Ethical frameworks set the tone for employees. You should create clear guidance and ensure all employees have been briefed.

- Educate employees on risks – Training establishes a shared understanding of limitations. The more your team understands the risks, the less likely they are to make mistakes.

- Monitor outputs for quality control – Using key metrics, you should monitor the prompts used, messages created, and overall AI use for your teams. Oversight allows for catching errors and biases.

- Assess progress with key performance indicators – Measuring metrics like accuracy and user satisfaction provides visibility into areas needing refinement.
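The two metrics named above, accuracy and user satisfaction, could be computed from human review records along the lines of the Python sketch below. The `Review` record, the 1-to-5 satisfaction scale, and the `kpis` function are assumptions chosen for illustration; a real deployment would define its own fields and thresholds.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """One human review of an AI output (hypothetical schema)."""
    accurate: bool     # reviewer judged the output factually correct
    satisfaction: int  # user rating, assumed to be on a 1-5 scale


def kpis(reviews):
    """Return the share of accurate outputs and the mean satisfaction rating."""
    if not reviews:
        # No data yet: report None rather than a misleading zero.
        return {"accuracy_rate": None, "avg_satisfaction": None}
    n = len(reviews)
    return {
        "accuracy_rate": sum(r.accurate for r in reviews) / n,
        "avg_satisfaction": sum(r.satisfaction for r in reviews) / n,
    }
```

Tracking these numbers per team or per approved prompt over time is one way to see which areas need refinement.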

With rigorous governance, training, and monitoring, AI’s capabilities can be honed while safeguarding against missteps.

Responsible Oversight Unlocks AI’s Potential

The emergence of AI systems like ChatGPT marks an exciting new frontier of possibility. However, realizing the full potential of this technology requires cultivating a nuanced understanding of its limitations and establishing governance to ensure its responsible development.

TeamAI provides vital features that enable businesses to have oversight over their staff’s AI use in a ChatGPT-like workspace.

TeamAI allows you to:

- Monitor the messages and prompts used by employees, clients, or projects.

- Create an approved library of prompts and personas.

- Monitor the usage of individual staff members (how often they use the tool).

TeamAI workspaces start at $5 a month.

Sign Up Today