DeepSeek stands out among the AI chatbots that have emerged in the last several years. Built by a Chinese company, it skyrocketed in popularity within months, rivaling products from established companies like OpenAI, the U.S.-based creator of ChatGPT.

DeepSeek differs from its competitors in cost, performance, and model size, and it offers several models with distinct capabilities. If you’re looking to stay ahead in the AI industry, it’s worth knowing about this company and its products.

What is DeepSeek?

DeepSeek is an AI chatbot released by a Hangzhou-based Chinese startup of the same name. The startup’s controlling shareholder is Liang Wenfeng, co-founder of the AI-driven quantitative hedge fund High-Flyer.

Shortly after its release, DeepSeek overtook ChatGPT as the top-ranked free app on Apple’s App Store. It stands out for being cheaper to use on some tasks and for requiring less energy than chatbots built by Silicon Valley companies. It was also trained on less powerful hardware and for much less money.

DeepSeek releases its model weights openly, so anyone can download, modify, and share them. Competitors such as OpenAI keep their flagship models proprietary, so this openness is a major draw.

DeepSeek grew out of a dedicated AI research lab that operates separately from High-Flyer’s hedge fund business. The lab released its first chatbot in 2023.

However, the versions that attracted the most attention were released in late 2024 and early 2025. The mobile app’s surge in popularity in late January 2025 triggered a sharp sell-off in U.S. tech stocks.

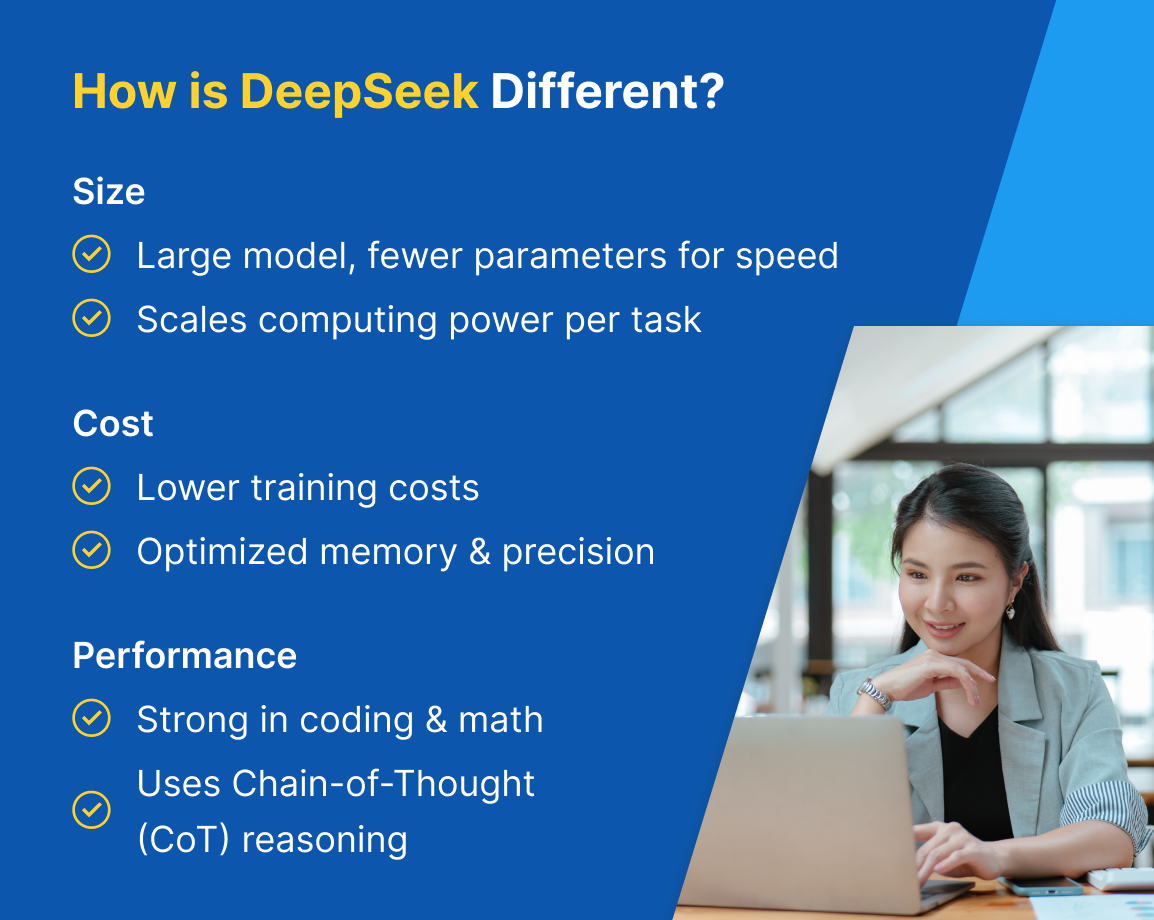

How is DeepSeek different from other AI models?

DeepSeek stands out in several ways from other AI models, including:

- Size: DeepSeek-V3 and DeepSeek-R1 are very large mixture-of-experts (MoE) models with 671 billion total parameters, but only about 37 billion of them are activated for each token. This keeps inference fast, because each token uses only a fraction of the compute a dense model of the same size would need.

- Cost: DeepSeek reports training costs far lower than those of its competitors. It also cuts costs by mixing numerical precisions during training, using low-precision FP8 where accuracy allows and higher precision where it is needed.

- Performance: DeepSeek’s scores on coding and math benchmarks are comparable to those of top models from U.S. AI developers. DeepSeek-R1 also uses long chain-of-thought (CoT) reasoning, breaking complex tasks into smaller steps to improve accuracy.
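The idea of using only some parameters at a time is what a mixture-of-experts layer does: a router scores every expert, but only the top few actually run for a given token. The sketch below is a deliberately simplified, generic top-k gating layer on scalar inputs, assuming made-up experts and router scores; it is not DeepSeek’s actual routing code, which uses many more experts and additional techniques. It ends with the active-parameter fraction implied by DeepSeek-V3’s published figures of 671 billion total and 37 billion active parameters.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_scores, k=2):
    """Toy top-k mixture-of-experts layer for a scalar input x.

    Simplified generic gating, NOT DeepSeek's router: only the k experts with
    the highest gate scores run, and their outputs are combined with softmax
    weights computed over those k scores. Returns (output, indices used).
    """
    top_k = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top_k])
    output = sum(w * experts[i](x) for w, i in zip(weights, top_k))
    return output, top_k

# Eight hypothetical "experts"; each just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in range(1, 9)]
gate_scores = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # made-up router scores

out, used = moe_forward(1.0, experts, gate_scores, k=2)
print(f"experts used: {used} of {len(experts)}")

# Fraction of parameters active per token, from DeepSeek-V3's published figures.
total_params, active_params = 671e9, 37e9
print(f"{active_params / total_params:.1%} of parameters active per token")
```

Only the two selected experts are ever called, which is why compute per token tracks the active parameters rather than the total.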

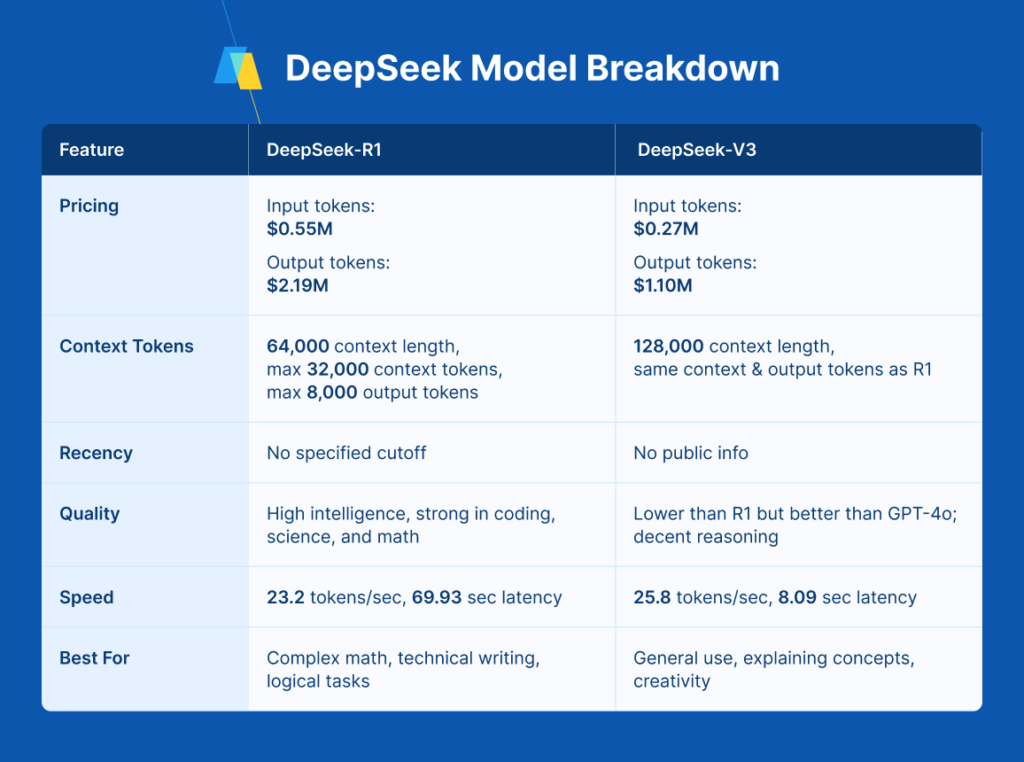

Comparing DeepSeek models

Like other AI developers, DeepSeek has released several models, each with different strengths. Here’s how they compare, to help you decide which best suits your application.

DeepSeek-R1

DeepSeek-R1 is an advanced reasoning model and one of DeepSeek’s most recent releases. It stands out for its strong logical reasoning. If you need AI for specialized tasks like complex math problems or technical writing, it is a powerful choice.

Here’s an overview of a few key details:

- Pricing: The standard price for this model is $0.55 per million input tokens and $2.19 per million output tokens. DeepSeek’s API offers discounted pricing during off-peak hours.

- Context: DeepSeek-R1’s API has a context length of 64,000 tokens. Its chain-of-thought reasoning can use up to 32,000 tokens, and its maximum output is 8,000 tokens.

- Recency: DeepSeek has not published this model’s knowledge cutoff, so if you need the most recent information, verify its answers against current sources.

- Quality: This model’s intelligence is comparable to OpenAI’s o1. It performs well in coding, science, and mathematics tasks, with strong reasoning capabilities.

- Speed: This model outputs 23.2 tokens per second, making it somewhat slower than other top AI products. Its latency (time to receive the first token) is 69.93 seconds.
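To turn DeepSeek-R1’s pricing and limits into a budget, a rough estimator helps. This is a minimal sketch that assumes the list prices quoted here, treats any off-peak discount as a parameter you supply (the actual rate and hours are not stated here), and assumes R1’s reasoning tokens are billed as output tokens, which is worth confirming on DeepSeek’s pricing page. The function and constant names are hypothetical, and the limit check is approximate because it ignores the tokens R1 spends on reasoning.

```python
# Standard list prices quoted for DeepSeek-R1, in USD per million tokens.
R1_INPUT_PER_M = 0.55
R1_OUTPUT_PER_M = 2.19

# API limits quoted for DeepSeek-R1, in tokens.
R1_CONTEXT_LIMIT = 64_000
R1_MAX_OUTPUT = 8_000

def r1_cost(input_tokens: int, output_tokens: int, discount: float = 0.0) -> float:
    """Estimate the USD cost of processing these token counts with DeepSeek-R1.

    `discount` is an ASSUMED off-peak fraction (e.g. 0.5 for 50% off); check
    DeepSeek's current pricing for the real rate and time window.
    """
    base = (input_tokens * R1_INPUT_PER_M + output_tokens * R1_OUTPUT_PER_M) / 1_000_000
    return base * (1 - discount)

def fits_r1_limits(input_tokens: int, output_tokens: int) -> bool:
    """Rough check that one request stays within the quoted context and output limits."""
    return output_tokens <= R1_MAX_OUTPUT and input_tokens + output_tokens <= R1_CONTEXT_LIMIT

req_in, req_out = 3_000, 1_500  # hypothetical single request
print(fits_r1_limits(req_in, req_out))
monthly = r1_cost(req_in * 1_000, req_out * 1_000)  # 1,000 such requests
print(f"${monthly:.2f} per 1,000 requests at standard pricing")
```

Because output tokens cost about four times as much as input tokens, long reasoning-heavy answers drive most of the bill.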

DeepSeek-V3

DeepSeek-V3 is a general-purpose model designed for efficiency across many types of tasks. It stands out for its greater creativity compared to DeepSeek-R1. While it is less capable than DeepSeek-R1 on highly technical topics, it’s a great choice for explaining concepts in plain language.

Here’s how it performs in the same areas:

- Pricing: This model’s API costs $0.27 per million input tokens and $1.10 per million output tokens, making it more cost-effective than DeepSeek-R1. Like DeepSeek-R1, it offers off-peak discounts.

- Context: Like DeepSeek-R1, it has a maximum output of 8,000 tokens, but it supports a longer context length of 128,000 tokens.

- Recency: DeepSeek has not published this model’s knowledge cutoff either.

- Quality: Although it is less capable than DeepSeek-R1, this model is still competitive, slightly outperforming GPT-4o overall. It has solid reasoning capabilities and performs well in coding and math, beating GPT-4o though not DeepSeek-R1.

- Speed: DeepSeek-V3 is slower than average, outputting 25.8 tokens per second, with a latency of 8.09 seconds to receive the first token. Its output speed is slightly faster than DeepSeek-R1’s, and its latency is far lower.
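The latency and output-speed figures for the two models combine into a simple response-time estimate: time to first token plus answer length divided by output speed. This sketch assumes the benchmark figures quoted in this article hold for your workload, which they may not, since real speed varies with provider, load, and prompt length. The `SpeedProfile` class is a hypothetical helper, not part of any DeepSeek tooling.

```python
from dataclasses import dataclass

@dataclass
class SpeedProfile:
    name: str
    latency_s: float     # seconds until the first token arrives
    tokens_per_s: float  # output speed once tokens are streaming

    def response_time(self, output_tokens: int) -> float:
        """Rough wall-clock estimate: wait for the first token, then stream the rest."""
        return self.latency_s + output_tokens / self.tokens_per_s

# Figures quoted in this article; actual numbers will vary.
r1 = SpeedProfile("DeepSeek-R1", latency_s=69.93, tokens_per_s=23.2)
v3 = SpeedProfile("DeepSeek-V3", latency_s=8.09, tokens_per_s=25.8)

for model in (r1, v3):
    print(f"{model.name}: ~{model.response_time(500):.0f} s for a 500-token answer")
```

With these figures, a 500-token answer takes about 91 seconds from DeepSeek-R1 and about 27 seconds from DeepSeek-V3, so for typical answers R1’s long wait for the first token matters far more than the two models’ similar output speeds.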

Choosing the best model for your needs

DeepSeek offers several AI models with different strengths and ideal use cases. DeepSeek-V3 suits most general and creative tasks, while DeepSeek-R1 is better for complex tasks that require specialized technical reasoning.
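One way to apply this guidance in code is to route each request by task type. DeepSeek’s API is OpenAI-compatible, and at the time of writing it exposes DeepSeek-V3 as `deepseek-chat` and DeepSeek-R1 as `deepseek-reasoner`; check DeepSeek’s current API documentation before relying on those IDs. The task labels and helper names below are hypothetical, and the sketch only builds the JSON body for a chat completions request rather than sending it, so no API key is needed.

```python
import json

# Hypothetical task labels that we treat as reasoning-heavy technical work.
TECHNICAL_TASKS = {"math", "coding", "science", "logic"}

def choose_model(task_type: str) -> str:
    """Hypothetical router: R1 for technical reasoning, V3 for everything else.

    Model IDs assume DeepSeek's API naming at the time of writing:
    "deepseek-reasoner" (DeepSeek-R1) and "deepseek-chat" (DeepSeek-V3).
    """
    return "deepseek-reasoner" if task_type in TECHNICAL_TASKS else "deepseek-chat"

def build_request(task_type: str, prompt: str) -> str:
    """Build, but do not send, a JSON body for an OpenAI-style chat completions call."""
    body = {
        "model": choose_model(task_type),
        "messages": [{"role": "user", "content": prompt}],
    }
    return json.dumps(body)

print(build_request("math", "Prove that the square root of 2 is irrational."))
print(build_request("marketing", "Draft a friendly product announcement."))
```

A fixed lookup like this is easy to audit; a production router might also weigh cost and latency, using figures like those quoted above.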

Beyond DeepSeek, many AI developers, including OpenAI and Anthropic, have created similar products. DeepSeek’s models stand out for their openly available weights and for a design that delivers strong performance even on less powerful hardware.

In contrast, OpenAI’s models are generally known for efficiency and versatility across a wide variety of tasks, while Anthropic’s Claude models stand out for their focus on safety and their nuanced reasoning capabilities.

Understanding the differences between developers and their products will help you decide which one is the best choice for a particular task or department in your company.

Unite the best AI models in one workspace

While using multiple AI models brings benefits, it comes with challenges like managing various API keys. With TeamAI, you get multiple models in one convenient platform. We consistently add the latest versions as they’re released to keep you at the forefront of AI technology.

Our platform’s adaptive AI capabilities save your team the time of researching each model. TeamAI’s Adaptive model automatically chooses the best model based on your interaction. Gain confidence knowing you’re using the ideal model for every application.

Sign up for an account to try multiple models in one place.